Introduction

One of the main goals of the project TISS (a detailed description of TISS can be found in [1]) is to reengineer and unify a large part of the information systems of the Vienna University of Technology (TUWIS). The legacy databases that have to be reverse engineered have a wide range of deficits making analysis and migration of the data structures and their contents difficult. In addition documentation for the database is scarce and consists mainly of comments for some tables and columns. Therefore a database reverse engineering process has been defined that aims at providing the necessary information about the legacy databases to successfully perform the data migration.

Problem Definition

TUWIS is a highly heterogeneous system. As almost every legacy system that has grown and evolved for forty years it has been modified and extended by numerous different developers with different technical skill levels and styles. To summarize briefly the legacy system to be reverse engineered actually consists of two subsystems which are tightly connected and highly interdependent. TUWIS, the older part, is written in COBOL and PL/SQL with a backend based on an Oracle DBMS. The newer part, TUWIS++, is a web built on the Zope application server, with a separate Oracle database. The two subsystems are mostly synchronized via their databases, which run on the same database server and duplicate large parts of the business data. Both systems interface in a wide variety of ways with neighboring systems. These neighboring systems range from information systems of government agencies, two SAP systems to multiple smaller specialized systems and tools.

Understanding the environment in which the database to be reverse engineered operates is essential. Often it is the only way to truly understand the structure of the database, if you analyze the data contained in it as well as the dataflow.

The identified deficits of the legacy databases the project team has to deal with are described in the following sections.

Missing Documentation

Original Cobol Legacy System (TUWIS)

Missing documentation is one of the key issues, a problem encountered in virtually all reengineering projects [2]. The original TUWIS user documentation consists of approximately 100 Microsoft Word files. Although primarily aimed at the users of TUWIS it contains valuable information about the database tables. This includes the table name as well as a table listing all columns, their data type and a short per column description. It does not, however, include more specific information like whether a certain column can be left empty or not. Basically this documentation is very similar to Oracle’s table documentation that can be retrieved as HTML provided that the column comments have been entered into the database. Unfortunately the column comments have not been fully entered into the database, leaving the two documentation locations largely out of sync.

Another problem with the old TUWIS documentation is that the development of TUWIS++ has not been reflected. It does not reflect any changes made to the old schema during and after the development.

Web-based Extension (TUWIS++)

Technical documentation for the newer, web based part of TUWIS is even scarcer. Unfortunately attempts to document the system in detail have not been successful. There is a collection of information specific to project management issues. This includes mostly out of date information about the general direction and goals of the project (developing and maintaining TUWIS++). An incomplete Wiki proved to be useful for the architecture overview, its relevance for the project TISS is limited at best. Besides, the data also seems to be fairly out of date.

But there is a part of the TUWIS++ documentation that is most relevant for TISS. It provides additional informa-tion about the data of seven database tables. Compared to the total number of tables, this seems to be rather limited, but the tables documented are absolutely vital to the system. It provides a per column description of the data contents, which is an almost exact duplicate of the column comments provided directly in the database. Additionally it contains quite detailed information about the purpose of the table and its use. For the tables that can be found both in the TUWIS and TUWIS++ schema, it also provides information on how the data is synchronized and it is also the most recent, with the last modifications not dating back more than a year.

Database Comments (Data Dictionary)

The Oracle DBMS, like many other RDBMS products, provides the possibility to store a comment on each table and column directly in the database. This information is stored along with some information automatically gathered by the RDBMS system directly in the system. It is usually referred to as a data dictionary. This mechanism has been used by the developers on both schemas, but to different extents. In the TUWIS database schema not even a fifth of the tables have a comment associated with them (see Table 1).

In the second schema (TUWIS++) the commenting feature has been used a bit more extensively. A little more than a quarter of all tables in this schema have comments. Looking at the overall picture of the combined schemas, a little less than a quarter of all tables have comments associated with them.

When it comes to comments on table columns the situation does not look that different (see Table 2). In fact the commenting feature has been hardly used at all for the old TUWIS schema. The new schema has been documented significantly better. About a third of all columns have been commented. Nonetheless, looking at the complete picture of the combined schemas gives a rather sobering view, as the high degree of documentation of the TUWIS++ schema is outweighed by the fact that the old TUWIS schema has significantly more (uncommented) columns.

Oracle provides procedures to generate up to date documentation out of the information stored in the database. This way it is easy to provide a comprehensive document, like a collection of HTML files that correctly describe the content of a schema. As the comment information is transparently stored directly in the database in regular database tables it is also easy to generate or export the documentation information in a custom format.

|

|

Missing Normalization

In 1970 Codd [3] devised a set of normal forms that database designers should adhere to. The main goal of normalizing a database schema is to avoid inconsistent data in the database. The Boyce Codd Normal Form (BCNF) [4], although not the strictest variant of normal forms, is a de facto industry standard today. The normal forms define a set of rules that are intended to guide the designer of a data model towards a model that will prohibit database constructs that allow the storing of inconsistent data. Following this model will ensure that the data collected over the years will be consistent and complete enough to be useable later on. Generally not conforming to the normal forms can also be seen as a feature, mainly to achieve greater performance as described in [5]. Denormalization, as the process of intentionally introducing some violations to the normal forms for performance reasons is called, always starts with a completely normalized data model though. This is a risky approach that requires careful planning, documentation and evaluation whether the benefits outweighs the risks. In addition it imposes a certain responsibility on the developers of the application to enforce the constraints that have not been explicitly declared in the database schema in the higher layers of the application.

In the TUWIS and TUWIS++ data models virtually all of these rules have been violated. Yet this cannot be seen as the fault of the database designer. The design is a result of the migration of the legacy COBOL data storage to a relational database. A good example of a violation of normal forms is the way personal data is stored in the two systems. There are two tables representing personal data (e.g. name, date of birth, address, etc.), one for employees and one for students. Now the problem is that the two groups of persons are not disjoint. A student can be employed by the university and all the same a university employee might register as a student. As soon as a person is student and employee, two records partly containing the same data have to be maintained and kept consistent. The integrity of the data therefore cannot be enforced by the DBMS as it lacks the necessary information. Another violation of the First Normal Form (1NF) can be found in the employee table. A standardized email address is stored for each employee. In addition an employee can enter an additional email address via a university-wide address book application. All email addresses are stored in two fields, EMAIL1 and EMAIL2 of the same table. As the 1NF prohibits the use of “repeating groups” [6], this is a clear violation.

Data Model Deprecation

This section discusses the problem of obsolete database objects cluttering an overall view of the database. At first this seems like a rather easy problem to come by – identify unnecessary tables and document the findings so developers later know that these tables can be ignored (or removed from the production database, depending on the exact goal of the analysis).

Exactly therein the most difficult part can be found. Missing documentation makes solving this problem much harder than it needs to be. Actually, the two problems pretty much go hand in hand. While it would be fairly easy to remove old unused tables in a well documented environment it is a rather tough task if no reliable information is provided.

The basic problem is, as with missing documentation, the fact that a common policy or process for changes is not implemented or, if implemented, not followed by the developers. Many of the deprecated tables look like temporary tables, either created as a backup before running a certain script or simply used for some complex one time evaluations of the business data that needed a temporary storage. Some simple measures would have avoided the problem.

First of all, it is a best practice policy that temporary tables do not go into the same schema as the business data.

It would be rather easy for the developer to add a short note as a comment on the table indicating that this table does not contain business data.

Third, another way to mark tables as temporary would have been some common naming convention. Prefixing all temporary table names with something like “TMP_” or the like would have probably been enough too.

None of these measures have been implemented by the team developing and maintaining TUWIS(++). This leaves the new development team with the problem that it will have to tediously reverse engineer this information with the help of a set of fairly unreliable heuristics.

Data – Application Responsibility

This section will cover the problem of data responsibility. Data responsibility is a clear definition of which system is responsible for a certain (subset) of the business data in an environment where many systems share some common data. A good example of such data is the information that is stored about each person or employee. This data will be needed by several systems and it is likely that more than one system will be able to modify the data. This exposes this data to the risk of concurrent modifications.

Usually this problem is solved on the database level. This will only work though, if all data related to a specific business domain is stored in a single database schema. As soon as the data is distributed across several database schemas, conflicting changes can easily be stored by both systems and have to be merged later on. This task of merging conflicting changes (e.g. one person has different home addresses in two different systems) often has to be done manually which is both expensive and inherently error prone. (How should somebody in the human resources department know which home address is correct?)

In TUWIS(++) data responsibility is often not clearly defined. Data gets imported from several different neighboring systems requiring manual intervention for conflicts in multiple cases. The logic behind the data synchronization is frequently hard coded in multiple places including import scripts and database triggers. Usually, this would not affect the reverse engineering of the database itself, but rather the overall task of migrating the whole application. Unfortunately, as the logic defining the data responsibility is partly implemented as database objects, it is necessary to also pay attention to this matter, especially during the migration phase.

Close Coupling

TUWIS is only part of a whole environment of systems that provide and process information. While these systems mainly operate independently from each other and are operated by different parts of the organization they also have to share information.

Since no common method of data exchange is employed, usually each interface between two systems is a separate solution often built on different methods of data exchange using a wide variety of different technologies. As TUWIS is an integral part of the overall system, it has a large amount of these interfaces – a total of approximately 25. Many of these interfaces operate on a similar dataset. So when migrating a set of tables that are used by some of these interfaces from TUWIS to TISS, all of them have to be modified in order to work with the new system. In many cases the migration will result in a complete rewriting of the interface. In some cases modifying or rewriting the interface will not be enough either, as the old interfaces are often highly specific. So in these cases modifications to the neighboring systems on the other end of these interfaces might be necessary.

All of the issues described above, especially the analysis of the old interfaces, necessary for modifying them accordingly, will require detailed information about the underlying database.

Solution

Migrating the data from the legacy database to the newly designed schema can be tedious without any documentation. In order to document the database properly, it is necessary to thoroughly analyze its structure, the use of the specific construct and its contents. The goal therefore is to detect most inconsistencies in the database during the documentation effort. This will greatly facilitate the migration process as the developer can use the documentation as a reliable resource pointing out the inconsistencies that he will later have to take into consideration during the migration phase.

The following process depicted in Figure 1 has been elaborate to reveal the required information on the legacy database. The process is structured into four parts, three of which are designed to be executed sequentially. None the less care has been taken to allow an iterative revisiting of each process step at later times. The fourth part is actually a cross section that is executed in parallel. It is also possible to define this part as a task that is common to all of the three primary parts.

|

Data Structure Extraction

Initially neither the extent nor the complexity of the legacy databases was known. Therefore it was necessary to create a comprehensive overview of the current structure. This step of the process was supported by off the shelf CASE (Computer Aided Software Engineering) tools. These tools, Fabforce DBDesigner41 and a documentation tool provided by the database vendor, were of great help for extracting the meta data (or source physical schema in [7]) describing the database structure. Nevertheless the (meta)data that has been automatically retrieved must be manually checked and, if necessary, completed. Furthermore a lot of more specific database object types will not be captured by any generic tool. Good examples are triggers, which are database objects that execute a procedure if a certain operation is executed on a data row, and synonyms, which are aliases to access a database object under a different name.

In the end the structural information about 346 tables with a total of around 5600 columns was extracted. Furthermore the comments stored directly in the database system were also transferred to a human readable and distributable format.

Database Object Categorization

The next step was to fully categorize the database assets discovered by the previous analysis. Therefore three main categories were defined:

- Relevant business data: all data that has to be migrated to the new system as otherwise a loss of business value would be unavoidable.

- System and implementation specific data: all data that is only needed for the legacy system to operate properly, but will not be migrated.

- Deprecated database assets: all aspects of the legacy databases that do not represent any semantical or operational value to either the legacy or the reengineered system.

The distinction between operational data and deprecated assets is relevant, as the operational aspects of the database still have to be regarded during the reengineering phase. It might not be possible to fully understand existing functionality, which has to be reengineered to the new system, without understanding the respective database areas.

The results of the categorization effort can be seen in Table 3.

|

Usage and Traffic Analysis

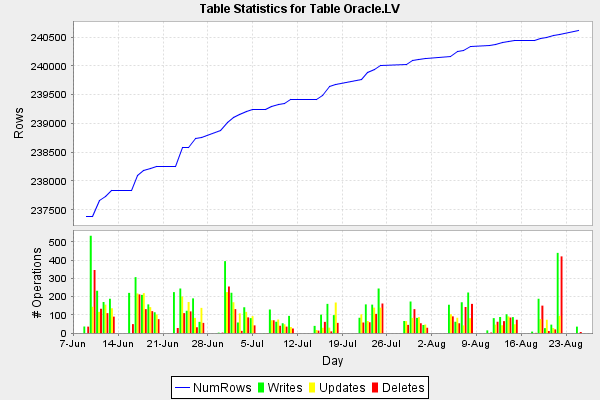

In this step of the analysis process the goal is to get a better impression of how intensively the legacy system is used and therefore get a first idea of how much of a strain will be on the reengineered system. The analysis of the legacy system will give some idea as to which parts should be most carefully designed with respect to performance.

Generally speaking the goal is to gather as much information about the usage of the database as possible. This starts with collecting read and write statistics. Ideally the database system provides means to count the number of reads, writes, updates and deletes on each table. It is essential that this data is gathered over the longest period of time possible. Depending on the legacy system and the business processes it implements, a lot of patterns will only become apparent over an extended period of time. To observe as many usage patterns as possible gathering the statistics for two or three months is essentially the absolute minimum.

The production database was configured to collect the appropriate data and produce a daily report. The data gathered in this way was then collected over more than six months and evaluated on a regular basis.

(Re-)Documentation

As mentioned earlier, unlike the other steps of the process, this task can be executed during or after each step. The presentation of results was deliberately separated from the remaining tasks, as it is frequently elaborated by a different group of developers on the reverse engineering team. In addition, this separation allows for a flexible “just-in-time” delivery of artifacts for concrete purposes.

During the application of this process the following artifacts were produced: One of the most valuable output of the process resulted from the results of the current state analysis and the categorization effort. The first step yielded a graphical representation of all tables of the legacy databases.

Due to the fact that all foreign keys were implicit the tables were more or less randomly distributed though. However, after performing the categorization step, some conclusions could be drawn as to which tables were related to each other. By including the categorization in the diagram from the previous step, the expressiveness of the presentation was significantly increased. By simply arranging the tables by category and at the same time color coding each of the categories, a universal diagram was created that visualized some of the key aspects of the migration effort. It demonstrated to nontechnical stakeholders the fact, that more than 40 percent of the legacy database assets would not be included in the migration.

A second and equally useful output was the web based redocumentation of the legacy database. On the basis of the results of the first two steps in the process a skeleton structure was generated in a wiki system. This skeleton consisted of one page for each table in the database as well as a series of indexes for easy access. The indexes were built representing the categorization. Additional indexes (e.g. sorted alphabetically) were also included. Each page describing a single table was already generated to include all the information available from the previous steps. During the execution of the data reverse engineering step the analysts then entered the newly retrieved information into these pages. After completing the third step of the process, the documentation was a fairly complete and up to date source of information for a wide array of developers, requirements analysts and of course the data migration experts.

Finally the usage and traffic analysis conducted in the last step also yielded results suitable for graphical presentation. A combined graph was developed that showed the number of read, write and delete in a bar chart in the bottom half and displayed the development of the total row count as a continuous line in the upper half. This chart was automatically generated for each database table.

|

Evaluation

Especially the categorization of the database tables including the elicitation of deprecated ones and the web based documentation has proven to be a vital part of the overall effort. On the other hand the usefulness of the effort put into producing a full usage analysis of the database has yet to fully surface. At this point it seems to make the most sense to again focus on the documentation effort to further improve this comprehensive source of information.

References

[1] W. Kleinert, T. Grechenig, T. Költringer, M. Bernhart, A. Knarek, and F. Schönbauer. The making of TISS: Juni 2008. ZIDline, 18:3–8, June 2008.

[2] Michael R. Blaha. Dimensions of database reverse engineering. In WCRE ‘97: Proceedings of the Fourth Working Conference on Reverse Engineering (WCRE ‘97), page 176, Washington, DC, USA, 1997. IEEE Computer Society.

[3] E. F. Codd. A relational model of data for large shared data banks. Commun. ACM, 13(6):377–387, 1970.

[4] E. F. Codd. Recent investigations in relational data base systems. In ACM Pacific, pages 15–20, 1975.

[5] G. Sanders and S. Shin. Denormalization effects on performance of rdbms. In HICSS ‘01: Proceedings of the 34th Annual Hawaii International Conference on System Sciences (HICSS-34)-Volume 3, page 3013, Washington, DC, USA, 2001. IEEE Computer Society.

[6] William Kent. A simple guide to five normal forms in relational database theory. pages 66–71, 1989.

[7] J. Henrard, J.-M. Hick, P. Thiran, and J.-L. Hainaut, “Strategies for data reengineering,” in Proceedings of the Ninth Working Conference on Reverse Engineering 2002, (WCRE ’02), 2002, pp. 211 – 220.